Turn my blog feed into a QA dataset to fine-tune a LLM

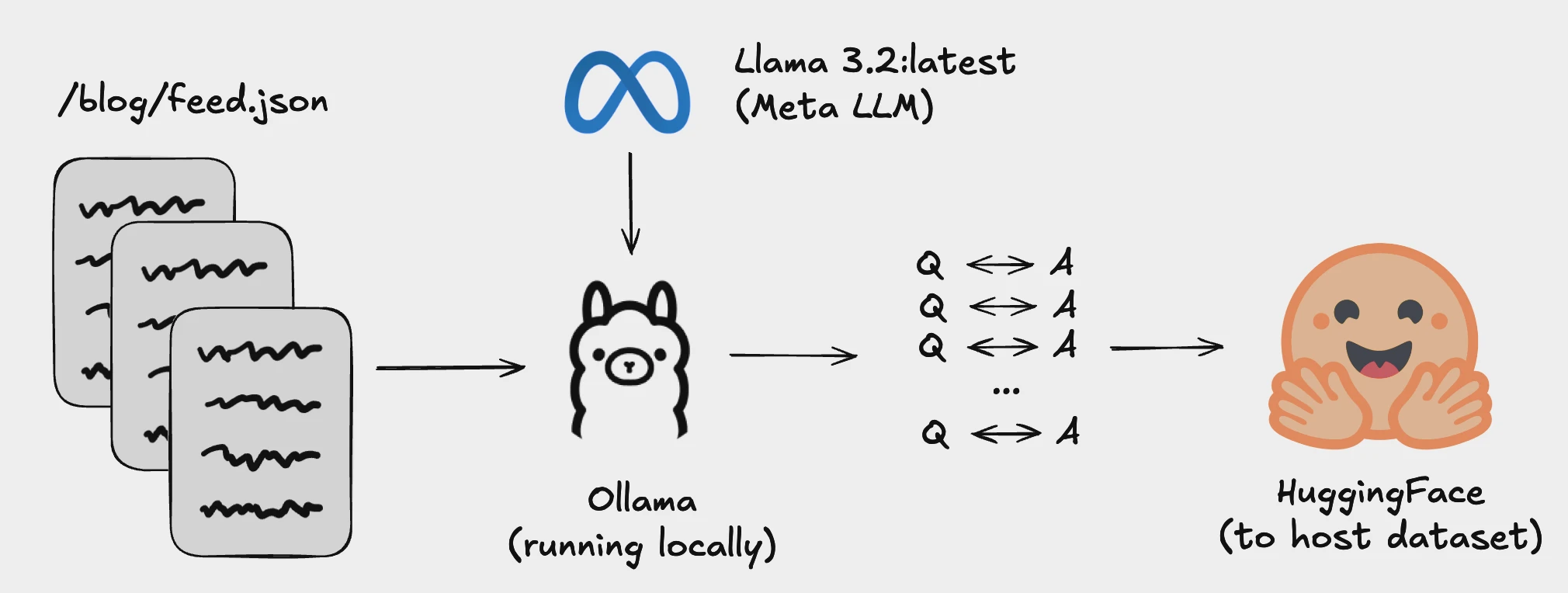

This project converts blog feed content into a structured Question-Answer dataset using LLaMA 3.2 (via Ollama) for local processing. The generated dataset follows a conversational format and can be automatically pushed to Hugging Face.

The open source code is available here.

I was looking to fine-tune an open source LLM with content that I have produced in the past to see how advanced such LLMs were and how close I could get a model running locally to "output tokens" the same way I would.

According to Daniel Kahneman and his book Thinking, Fast and Slow, humans have two modes of thought:

- System 1: Fast, instinctive and emotional. An example of this are my posts on X.

There are multiple libraries out there to scrape data from X. One that I used recently, and liked (without requiring an X API key) was Twitter scraper finetune from ElizaOS.

- System 2: Slower, more deliberative and more logic. An example of this is my blog, where some of these posts take me several hours to write and need to sleep on the topic before pushing.

For this, I didn't find any good out-of-the-box library that allowed me to convert my posts into a QA dataset to fine-tune a model.

So this is what I ended up building.

Getting Started

In order to do this you will need:

- Python 3.11

- Poetry (for python dependencies)

- Ollama (to run Llama 3.2)

- Hugging Face account (for dataset upload)

and obviously your blog in a JSON feed like https://didierlopes.com/blog/feed.json.

1. Install dependencies

poetry install

poetry run python -m spacy download en_core_web_sm

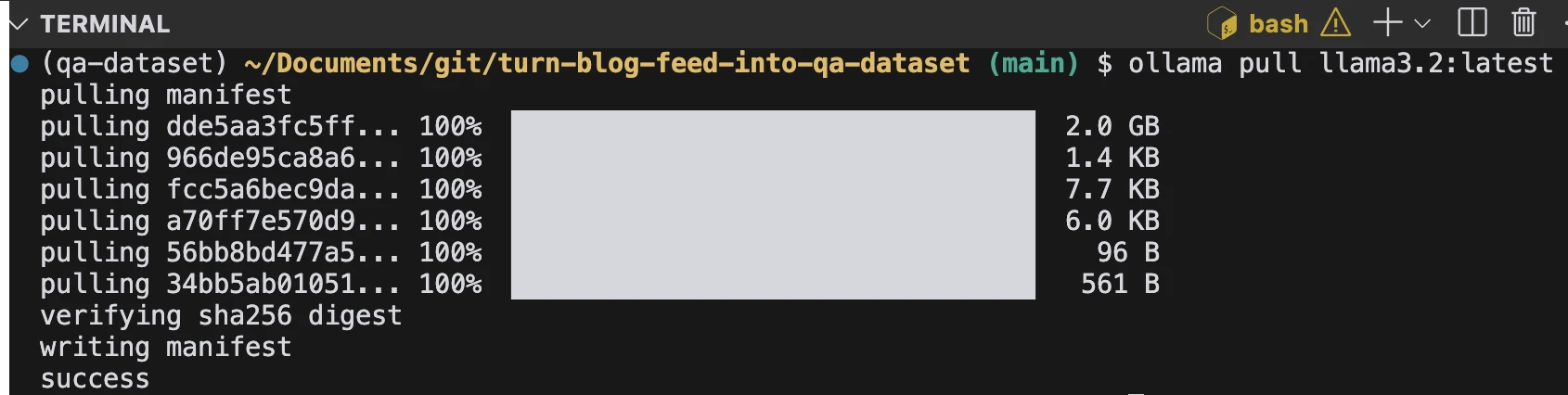

2. Install Ollama and pull Llama 3.2

Follow instructions to install Ollama: https://ollama.com/

Select a model to run locally using https://ollama.com/search.

In this case, we want to run llama3.2:latest (https://ollama.com/library/llama3.2).

ollama pull llama3.2:latest

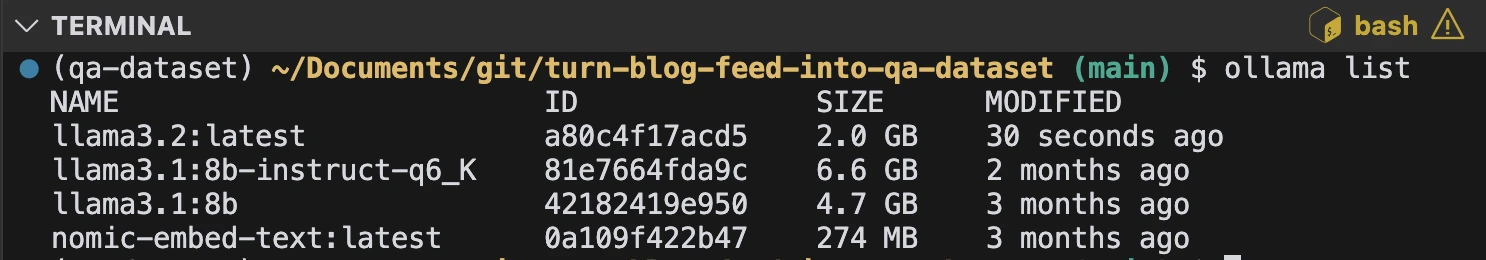

Then, we can check that the model has been downloaded with:

ollama list

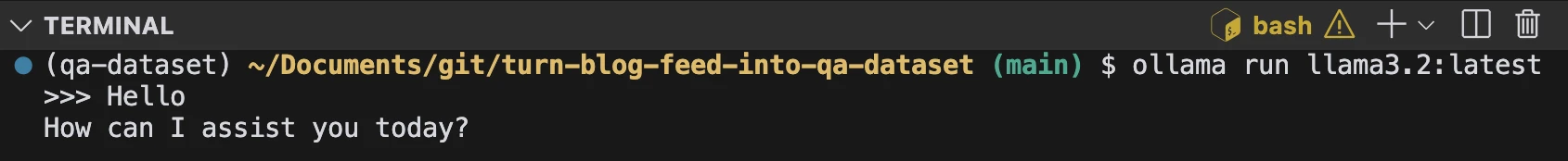

Finally, we can test that it works with:

ollama run llama3.2:latest

3. Configure Hugging Face

-

Create a write-enabled token at Hugging Face

-

Create a

.envfile:

HF_TOKEN=your_token_here

Usage

1. Update the blog feed URL in this notebook.

Below you can see the feed structure being used - which is the default coming from Docusaurus, which is the framework I'm using to auto-generate the feed for my personal blog.

url = "https://didierlopes.com/blog/feed.json"

JSON Feed Structure

{

"version": "https://jsonfeed.org/version/1",

"title": "Didier Lopes Blog",

"home_page_url": "https://didierlopes.com/blog",

"description": "Didier Lopes Blog",

"items": [

{

"id": "URL of the post",

"content_html": "HTML content of the post",

"url": "URL of the post",

"title": "Title of the post",

"summary": "Brief summary of the post",

"date_modified": "ISO 8601 date format",

"tags": [

"array",

"of",

"tags"

]

},

// ... more items

]

}

2. Set your Hugging Face dataset repository name:

dataset_repo = "didierlopes/my-blog-qa-dataset"

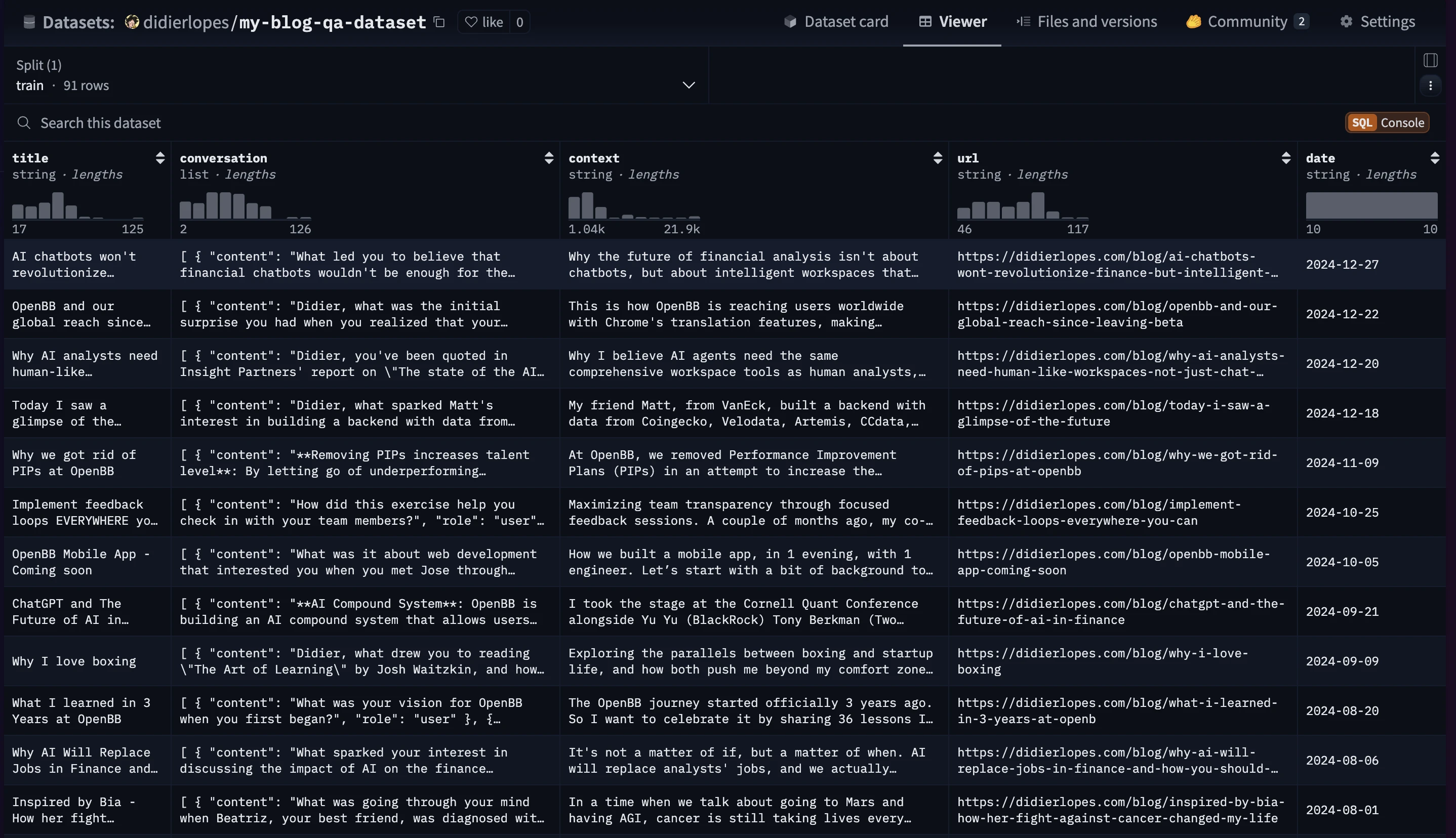

This is what the dataset will look like in HuggingFace: https://huggingface.co/datasets/didierlopes/my-blog-qa-dataset/viewer.

3. Run the notebook cells sequentially.

The notebook contains detailed explanations throughout to guide you through the process step-by-step.

Dataset Format

The generated dataset includes:

title: Blog post titleconversation: Array of Q&A pairs in role-based formatcontext: Original cleaned blog contenturl: Source blog post URLdate: Publication date

Note: This is the format of the conversation field:

conversation = [

{

"role": "user",

"content": (

"You mentioned that when ChatGPT launched, everyone rushed to build "

"financial chatbots. What were some of the fundamental truths that "

"those who built these chatbots missed?"

)

},

{

"role": "assistant",

"content": (

"Those building financial chatbots missed two fundamental truths:"

"1. AI models are useless without access to your data."

"2. Access to data isn't enough - AI needs to handle complete "

"workflows, not just conversations."

"These limitations led to chatbots that can't access proprietary "

"data, can't handle complex workflows and restrict analysts to an"

"unnatural chat interface."

)

},

# ... more Q&A pairs following the same pattern

]

Summary of how it works

- Fetches blog content from JSON feed

- Cleans HTML to markdown format

- Analyzes sentence count to determine Q&A pair quantity

- Generates contextual questions using LLaMA 3.2 running locally

- Creates corresponding answers

- Filters and removes duplicate Q&A pairs

- Formats data for Hugging Face

- Pushes to Hugging Face Hub